Meta Oversight Board to Probe Celebrity Deepfake Porn Cases

Celebrity Deepfake Porn Cases Will Be Investigated by Meta Oversight Board

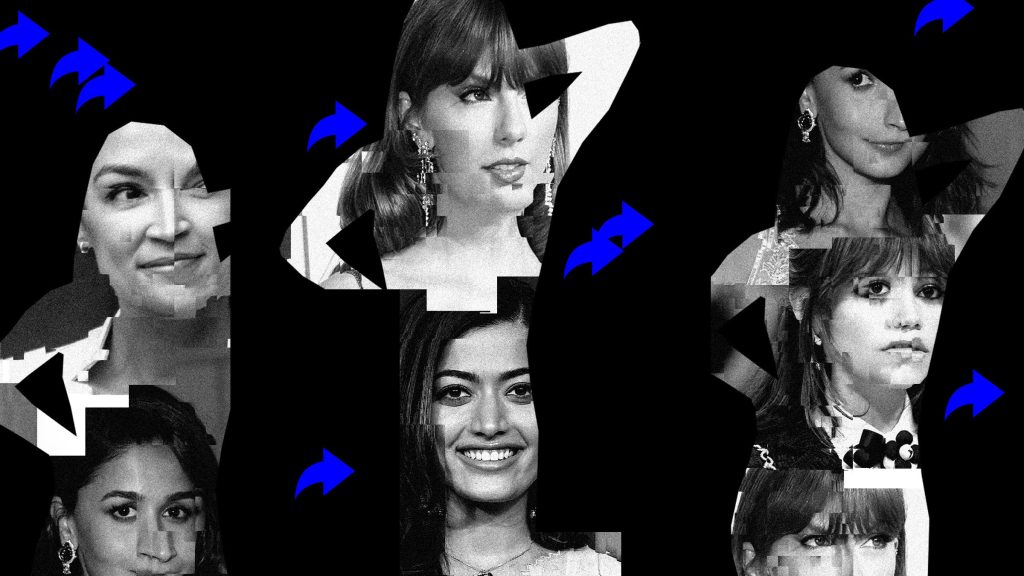

In recent years, the rise of deepfake technology has become a cause for concern, especially in cases involving celebrities. Deepfake porn, in particular, has been a growing issue, with individuals using AI to superimpose the faces of famous people onto pornographic videos without their consent.

To address this issue, Meta, the parent company of Facebook and Instagram, has announced that its Oversight Board will investigate cases of celebrity deepfake porn. The board, which is made up of independent experts and reviews content moderation decisions made by Meta, will now also take up cases involving deepfake pornographic material.

This move comes in response to increasing pressure from activists and lawmakers to take action against the spread of deepfake porn online. Many have argued that these videos not only damage the reputation and privacy of the people depicted but also perpetuate harmful stereotypes and contribute to the objectification of women.

By investigating and potentially removing deepfake pornographic material from its platforms, Meta is taking a step toward combating the malicious misuse of AI technology. It remains to be seen how effective this measure will be in curbing the spread of deepfake porn, but it is a positive development in the ongoing battle against online harassment and exploitation.